[ML lab 11-2] MNIST 99% with CNN

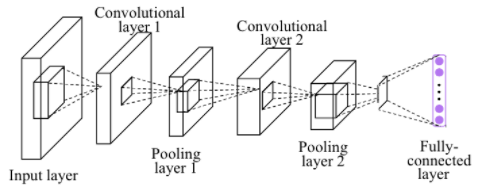

[Simple CNN]

import numpy as np

import tensorflow as tf

import random

mnist = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_test = x_test / 255

x_train = x_train / 255

x_train = x_train.reshape(x_train.shape[0], 28, 28, 1)

x_test = x_test.reshape(x_test.shape[0], 28, 28, 1)

# one hot encode y data

y_train = tf.keras.utils.to_categorical(y_train, 10)

y_test = tf.keras.utils.to_categorical(y_test, 10)
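To make the one-hot step concrete, here is a minimal pure-Python sketch of the encoding that `to_categorical` produces. The helper name `one_hot` is hypothetical and only for illustration; the real function returns a NumPy array rather than nested lists.

```python
def one_hot(labels, num_classes):
    """Return one row per label with 1.0 at the label's index and 0.0 elsewhere."""
    rows = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0
        rows.append(row)
    return rows


# Two example digit labels (3 and 0) encoded over the 10 MNIST classes.
encoded = one_hot([3, 0], num_classes=10)
print(encoded[0])
print(encoded[1])
```

Each row sums to 1, which is the form `categorical_crossentropy` expects for the targets.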

# hyper parameters

learning_rate = 0.001

training_epochs = 12

batch_size = 128

tf.model = tf.keras.Sequential()

# L1

tf.model.add(tf.keras.layers.Conv2D(filters=16, kernel_size=(3, 3), input_shape=(28, 28, 1), activation='relu'))

tf.model.add(tf.keras.layers.MaxPooling2D(pool_size=(2, 2)))

# L2

tf.model.add(tf.keras.layers.Conv2D(filters=32, kernel_size=(3, 3), activation='relu'))

tf.model.add(tf.keras.layers.MaxPooling2D(pool_size=(2, 2)))

# L3 fully connected

tf.model.add(tf.keras.layers.Flatten())

tf.model.add(tf.keras.layers.Dense(units=10, kernel_initializer='glorot_normal', activation='softmax'))
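The layer stack above can be checked by hand. The sketch below walks through the output sizes and parameter counts, assuming the Keras defaults used here: `'valid'` padding and stride 1 for `Conv2D`, and a pooling stride equal to `pool_size` for `MaxPooling2D`. The helper names are hypothetical.

```python
def conv_out(size, kernel):
    # 'valid' padding, stride 1: each spatial side shrinks by kernel - 1
    return size - kernel + 1


def pool_out(size, pool):
    # non-overlapping pooling: integer division, any remainder is dropped
    return size // pool


def conv_params(kernel, in_channels, out_channels):
    # one kernel x kernel x in_channels filter per output channel, plus one bias each
    return kernel * kernel * in_channels * out_channels + out_channels


size = 28
size = pool_out(conv_out(size, 3), 2)    # L1: 28 -> 26 -> 13
l1_params = conv_params(3, 1, 16)

size = pool_out(conv_out(size, 3), 2)    # L2: 13 -> 11 -> 5
l2_params = conv_params(3, 16, 32)

flat_units = size * size * 32            # Flatten: 5 * 5 * 32
dense_params = flat_units * 10 + 10      # weights plus biases for 10 classes

total_params = l1_params + l2_params + dense_params
print(size, flat_units, l1_params, l2_params, dense_params, total_params)
```

These figures should match what `tf.model.summary()` prints for the same model.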

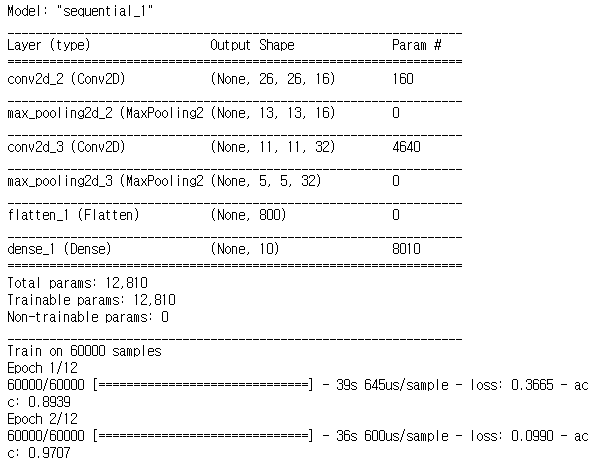

tf.model.compile(loss='categorical_crossentropy', optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate), metrics=['accuracy'])

tf.model.summary()

tf.model.fit(x_train, y_train, batch_size=batch_size, epochs=training_epochs)
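As a rough check on how much work `fit` does with these hyperparameters, the sketch below counts the weight updates. It assumes the standard MNIST training split of 60,000 images and that the final, smaller batch of each epoch is kept, which is the default when training from in-memory arrays.

```python
import math

train_samples = 60000      # size of the MNIST training split
batch_size = 128
training_epochs = 12

# Number of batches (and therefore weight updates) in one epoch.
steps_per_epoch = math.ceil(train_samples / batch_size)

# The last batch of each epoch holds whatever is left over.
last_batch_size = train_samples - (steps_per_epoch - 1) * batch_size

total_updates = steps_per_epoch * training_epochs
print(steps_per_epoch, last_batch_size, total_updates)
```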

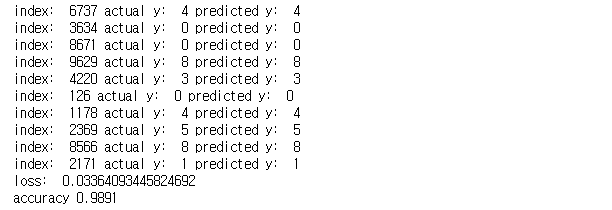

# predict 10 random hand-writing data

y_predicted = tf.model.predict(x_test)

for x in range(0, 10):

    random_index = random.randint(0, x_test.shape[0]-1)

    print("index: ", random_index,

          "actual y: ", np.argmax(y_test[random_index]),

          "predicted y: ", np.argmax(y_predicted[random_index]))

evaluation = tf.model.evaluate(x_test, y_test)

print('loss: ', evaluation[0])

print('accuracy', evaluation[1])

[Output]

. . .

Training with the CNN raises accuracy to about 98.9%.
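The accuracy metric here is the fraction of samples whose highest-probability class matches the one-hot label. A minimal pure-Python sketch of that calculation follows; the three-class probabilities and labels are made-up illustration data, not model output.

```python
def argmax(values):
    # index of the largest entry
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(probabilities, one_hot_labels):
    # count samples where the predicted class equals the labelled class
    correct = sum(
        argmax(p) == argmax(y) for p, y in zip(probabilities, one_hot_labels)
    )
    return correct / len(probabilities)


# Hypothetical softmax outputs for three samples over three classes.
probs = [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]
labels = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]

result = accuracy(probs, labels)
print(result)
```

The first two samples are classified correctly and the third is not, so two of three predictions match.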

[Deep CNN]

Adding dropout and more layers makes it possible to build a model with even higher accuracy.
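One way this might look in Keras is sketched below. The filter counts, the 625-unit dense layer, the `'same'` padding, and the dropout rate of 0.3 are assumptions chosen for illustration, not values taken from this post, and the function name `build_deep_cnn` is hypothetical.

```python
import tensorflow as tf


def build_deep_cnn(dropout_rate=0.3):
    """Three conv blocks with dropout, followed by two dense layers."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        # Block 1: 28x28 -> 14x14
        tf.keras.layers.Conv2D(32, (3, 3), padding='same', activation='relu'),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Dropout(dropout_rate),
        # Block 2: 14x14 -> 7x7
        tf.keras.layers.Conv2D(64, (3, 3), padding='same', activation='relu'),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Dropout(dropout_rate),
        # Block 3: 7x7 -> 3x3
        tf.keras.layers.Conv2D(128, (3, 3), padding='same', activation='relu'),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Dropout(dropout_rate),
        # Classifier head
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(625, activation='relu'),
        tf.keras.layers.Dropout(dropout_rate),
        tf.keras.layers.Dense(10, activation='softmax'),
    ])
    model.compile(
        loss='categorical_crossentropy',
        optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),
        metrics=['accuracy'],
    )
    return model


model = build_deep_cnn()
model.summary()
```

The model can be trained with the same `fit` and `evaluate` calls as the simple CNN. Dropout layers are active only during training, so evaluation uses the full network.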
