AI/ML for Everyone (SungKim)

[ML lab 04-1] Implementing multi-variable linear regression in TensorFlow

ferozsun

2021. 2. 16. 11:42

[multi-variable]

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

x1_data = [73., 93., 89., 96., 73.]
x2_data = [80., 88., 91., 98., 66.]
x3_data = [75., 93., 90., 100., 70.]
y_data = [152., 185., 180., 196., 142.]

# placeholders for a tensor that will be always fed.
x1 = tf.placeholder(tf.float32)
x2 = tf.placeholder(tf.float32)
x3 = tf.placeholder(tf.float32)
Y = tf.placeholder(tf.float32)

w1 = tf.Variable(tf.random_normal([1]), name='weight1')
w2 = tf.Variable(tf.random_normal([1]), name='weight2')
w3 = tf.Variable(tf.random_normal([1]), name='weight3')
b = tf.Variable(tf.random_normal([1]), name='bias')

# Hypothesis
hypothesis = x1 * w1 + x2 * w2 + x3 * w3 + b

# cost/loss function
cost = tf.reduce_mean(tf.square(hypothesis - Y))

# Minimize. Need a very small learning rate for this data set
optimizer = tf.train.GradientDescentOptimizer(learning_rate=1e-5)
train = optimizer.minimize(cost)

# Launch the graph in a session
sess = tf.Session()

# Initializes global variables in the graph
sess.run(tf.global_variables_initializer())

for step in range(2001):
    cost_val, hy_val, _ = sess.run([cost, hypothesis, train],
                                   feed_dict={x1: x1_data, x2: x2_data, x3: x3_data, Y: y_data})
    if step % 10 == 0:
        print(step, "Cost: ", cost_val, "\nPrediction:\n", hy_val)
```

As the number of input variables grows, this code becomes longer and more complex, because every variable needs its own placeholder, its own weight, and its own term in the hypothesis.
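To make the graph's work concrete, here is a minimal plain-Python sketch (no TensorFlow) of what each `train` step does for the three-variable model. It computes the hypothesis, the mean squared error cost, the gradients of that cost, and the gradient-descent update. The gradients are derived by hand and should match what TensorFlow computes for this cost, up to float32 vs float64 precision. Assumptions: the weights start at 0 instead of `tf.random_normal` so the run is deterministic, and the helper names `predict`, `cost_of`, and `train_steps` are hypothetical. The sketch also hints at why the comment asks for a very small learning rate. With inputs around 70 to 100, a rate of `1e-4` already overshoots, and the cost grows instead of shrinking.

```python
x1_data = [73., 93., 89., 96., 73.]
x2_data = [80., 88., 91., 98., 66.]
x3_data = [75., 93., 90., 100., 70.]
y_data = [152., 185., 180., 196., 142.]


def predict(w1, w2, w3, b):
    """Hypothesis x1*w1 + x2*w2 + x3*w3 + b for every instance."""
    return [x1 * w1 + x2 * w2 + x3 * w3 + b
            for x1, x2, x3 in zip(x1_data, x2_data, x3_data)]


def cost_of(pred):
    """Mean squared error, like tf.reduce_mean(tf.square(hypothesis - Y))."""
    return sum((p - y) * (p - y) for p, y in zip(pred, y_data)) / len(y_data)


def train_steps(learning_rate, steps):
    """Plain gradient descent; returns the cost before each step and after the last one."""
    w1 = w2 = w3 = b = 0.0  # assumption: zero init instead of random_normal
    m = len(y_data)
    history = []
    for _ in range(steps):
        pred = predict(w1, w2, w3, b)
        history.append(cost_of(pred))
        err = [p - y for p, y in zip(pred, y_data)]
        # d(cost)/dw_j = (2/m) * sum(err_i * x_j_i); d(cost)/db = (2/m) * sum(err_i)
        g1 = 2 / m * sum(e * x for e, x in zip(err, x1_data))
        g2 = 2 / m * sum(e * x for e, x in zip(err, x2_data))
        g3 = 2 / m * sum(e * x for e, x in zip(err, x3_data))
        gb = 2 / m * sum(err)
        w1 -= learning_rate * g1
        w2 -= learning_rate * g2
        w3 -= learning_rate * g3
        b -= learning_rate * gb
    history.append(cost_of(predict(w1, w2, w3, b)))
    return history


stable = train_steps(1e-5, 2000)
print("lr=1e-5 first/last cost:", stable[0], stable[-1])
unstable = train_steps(1e-4, 20)
print("lr=1e-4 first/last cost:", unstable[0], unstable[-1])
```

With `1e-5` the recorded cost should only go down. With `1e-4` it blows up within a few steps, so in practice the learning rate is chosen relative to the scale of the inputs.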

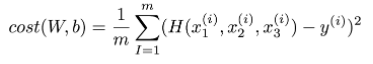

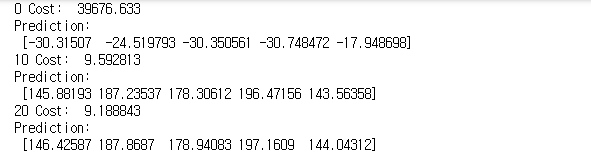

[Output]

The predictions for y gradually approach [152., 185., 180., 196., 142.].

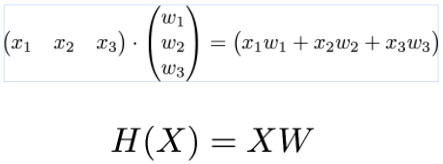
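The cost minimized in the code above is the mean squared error over the m = 5 instances. `GradientDescentOptimizer` moves each parameter against the partial derivative of that cost. Written out for the three-variable hypothesis, with learning rate α = 1e-5 as in the code, the derivation is as follows. It is a derivation of the same cost, not output from the original post.

$$
\begin{aligned}
H(x_1, x_2, x_3) &= w_1 x_1 + w_2 x_2 + w_3 x_3 + b \\
\text{cost}(w, b) &= \frac{1}{m} \sum_{i=1}^{m} \left( H(x^{(i)}) - y^{(i)} \right)^2 \\
\frac{\partial\,\text{cost}}{\partial w_j} &= \frac{2}{m} \sum_{i=1}^{m} \left( H(x^{(i)}) - y^{(i)} \right) x_j^{(i)}, \qquad
\frac{\partial\,\text{cost}}{\partial b} = \frac{2}{m} \sum_{i=1}^{m} \left( H(x^{(i)}) - y^{(i)} \right) \\
w_j &\leftarrow w_j - \alpha \frac{\partial\,\text{cost}}{\partial w_j}, \qquad
b \leftarrow b - \alpha \frac{\partial\,\text{cost}}{\partial b}
\end{aligned}
$$

Each weight's gradient is scaled by its input values, which here are roughly 70 to 100. This fits the code comment that a very small learning rate is needed for this data set.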

Using a matrix

```python
import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()

x_data = [[73., 80., 75.], [93., 88., 93.],
          [89., 91., 90.], [96., 98., 100.], [73., 66., 70.]]
y_data = [[152.], [185.], [180.], [196.], [142.]]

# placeholders for a tensor that will be always fed.
X = tf.placeholder(tf.float32, shape=[None, 3])  # any number of rows (n), 3 elements per row
Y = tf.placeholder(tf.float32, shape=[None, 1])  # any number of rows (n), 1 element per row

W = tf.Variable(tf.random_normal([3, 1]), name='weight')
b = tf.Variable(tf.random_normal([1]), name='bias')

# Hypothesis
hypothesis = tf.matmul(X, W) + b

# cost/loss function
cost = tf.reduce_mean(tf.square(hypothesis - Y))

# Minimize. Need a very small learning rate for this data set
optimizer = tf.train.GradientDescentOptimizer(learning_rate=1e-5)
train = optimizer.minimize(cost)

# Launch the graph in a session
sess = tf.Session()

# Initializes global variables in the graph
sess.run(tf.global_variables_initializer())

for step in range(2001):
    cost_val, hy_val, _ = sess.run([cost, hypothesis, train],
                                   feed_dict={X: x_data, Y: y_data})
    if step % 10 == 0:
        print(step, "Cost: ", cost_val, "\nPrediction:\n", hy_val)
```

The advantage of using a matrix is that the implementation stays simple regardless of the number of variables: adding a variable only changes the shapes of X and W, not the hypothesis code.
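The matrix version computes the same predictions as the per-variable version, because row i of XW is x1·w1 + x2·w2 + x3·w3 for instance i. Below is a minimal plain-Python sketch (no TensorFlow) that checks this on the five instances from the three-variable example. The helper `matmul_plus_bias` and the sample weights are hypothetical, chosen only for the comparison. It also shows why the `None` in `shape=[None, 3]` is useful: the same 3×1 W works for a batch with any number of rows.

```python
def matmul_plus_bias(X, W, b):
    """Compute X @ W + b for X of shape [n, k] and W of shape [k, 1]."""
    return [[sum(x * w[0] for x, w in zip(row, W)) + b] for row in X]


x_data = [[73., 80., 75.], [93., 88., 93.],
          [89., 91., 90.], [96., 98., 100.], [73., 66., 70.]]

# Hypothetical weights, just to compare the two formulations.
w1, w2, w3, b = 0.5, 1.0, 0.5, 2.0
W = [[w1], [w2], [w3]]  # shape [3, 1], like tf.Variable(tf.random_normal([3, 1]))

matrix_pred = matmul_plus_bias(x_data, W, b)
scalar_pred = [[x1 * w1 + x2 * w2 + x3 * w3 + b] for x1, x2, x3 in x_data]
print(matrix_pred == scalar_pred)  # True

# The same W also handles a batch with a different number of rows.
print(matmul_plus_bias(x_data[:2], W, b))  # [[156.0], [183.0]]
```

Adding a fourth variable would only mean a fourth column in each row of X and a fourth row in W. The function itself stays unchanged.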

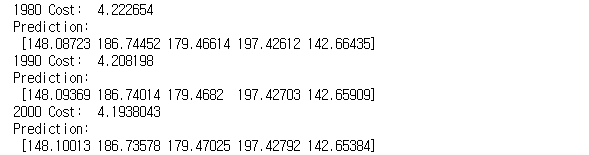

[Output]